Once you've written your code, it's a good idea to test that each variant behaves as you'd expect. If you find a bug in your implementation after you've launched the experiment, you can lose days of effort, because the experiment results can no longer be trusted.

The best way to do this is to add an optional override to your release conditions. For example, you can create an override that assigns a user to the test variant if their email is your own (or a teammate's). To do this:

1. Go to your experiment's feature flag.

2. Ensure the feature flag is enabled by checking the "Enable feature flag" box.

3. Add a new condition set with the condition `email = your_email@domain.com`, and set the rollout percentage for this set to 100%.

   - In cases where `email` is not available (such as when your users are logged out), you can use a parameter like `utm_source` and append `?utm_source=your_variant_name` to your URL.

4. Set the optional override for the variant you'd like to assign these users to.

5. Click "Save".
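To see why an override set routes you to a fixed variant, here is a simplified Python model of release-condition evaluation. It is a sketch under stated assumptions, not PostHog's actual implementation: it assumes condition sets are checked top to bottom, that the first set whose properties match and whose rollout includes the user wins, and that bucketing uses a SHA-1 hash of the flag key and user ID. `ConditionSet`, `evaluate_flag`, `with_utm_source`, the flag key `my-experiment`, and the variant names are all hypothetical.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


@dataclass
class ConditionSet:
    # Hypothetical model: every property here must equal the user's value.
    properties: Dict[str, str] = field(default_factory=dict)
    rollout_percentage: float = 100.0
    # Optional override: matching users always get this variant.
    variant_override: Optional[str] = None


def _bucket(flag_key: str, distinct_id: str, salt: str = "") -> float:
    """Deterministic number in [0, 1) derived from the flag key and user ID."""
    digest = hashlib.sha1(f"{flag_key}.{distinct_id}{salt}".encode()).hexdigest()
    return int(digest[:15], 16) / 16**15


def evaluate_flag(flag_key: str, distinct_id: str, person_props: Dict[str, str],
                  condition_sets: List[ConditionSet],
                  variants: Dict[str, float]) -> Optional[str]:
    """Return the user's variant, or None if no condition set includes them."""
    for cs in condition_sets:  # assumption: checked top to bottom
        if any(person_props.get(k) != v for k, v in cs.properties.items()):
            continue  # properties don't match this set
        if _bucket(flag_key, distinct_id) * 100 >= cs.rollout_percentage:
            continue  # user falls outside this set's rollout
        if cs.variant_override is not None:
            return cs.variant_override
        # No override: split users across variants using a second hash.
        point = _bucket(flag_key, distinct_id, salt="variant") * 100
        cumulative = 0.0
        for name, percentage in variants.items():
            cumulative += percentage
            if point < cumulative:
                return name
    return None


def with_utm_source(url: str, variant_name: str) -> str:
    """Append utm_source=<variant_name> to a URL, keeping any existing query."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append(("utm_source", variant_name))
    return urlunsplit(parts._replace(query=urlencode(query)))


conditions = [
    ConditionSet({"email": "your_email@domain.com"}, 100, "test"),  # your override
    ConditionSet({"utm_source": "test"}, 100, "test"),  # logged-out override
    ConditionSet({}, 100),  # everyone else, split by variant percentages
]
variants = {"control": 50, "test": 50}

print(evaluate_flag("my-experiment", "user-1",
                    {"email": "your_email@domain.com"}, conditions, variants))  # → test
print(with_utm_source("https://example.com/pricing", "test"))
# → https://example.com/pricing?utm_source=test
```

Because the override set sits above the catch-all set and has a 100% rollout, the matching user never reaches the hash-based split. The `with_utm_source` helper covers the logged-out case where `email` is unavailable: it builds the test URL whether or not the page already has a query string.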

Once you've tested that it works, you're ready to launch your experiment.

Notes:

- The feature flag is activated only when you launch the experiment, or if you've manually checked the "Enable feature flag" box.

- While the PostHog toolbar enables you to toggle feature flags on and off, this only works for active feature flags and won't work for your experiment feature flag while it is still in draft mode.

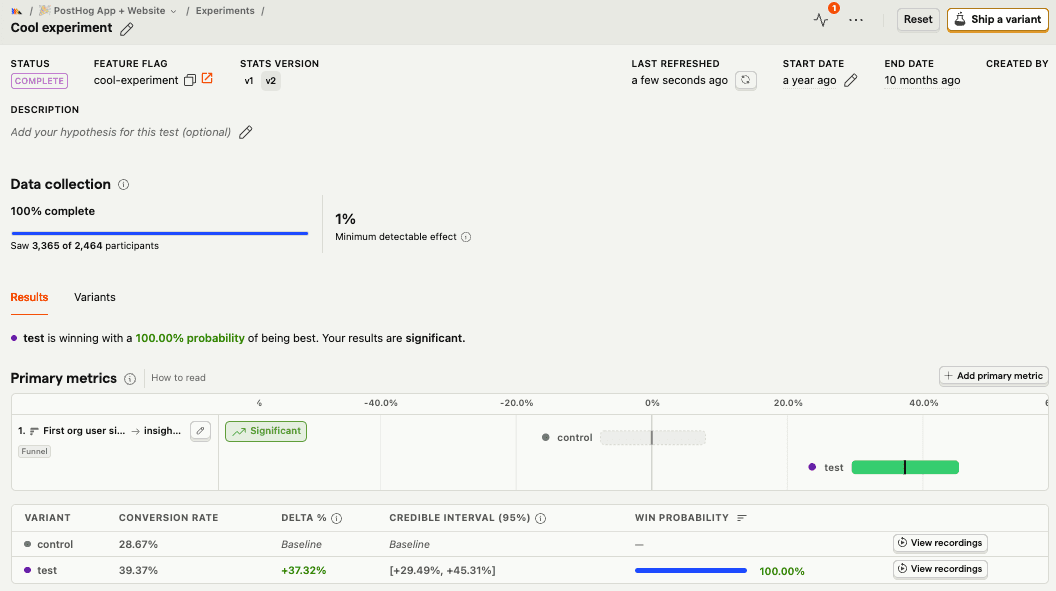

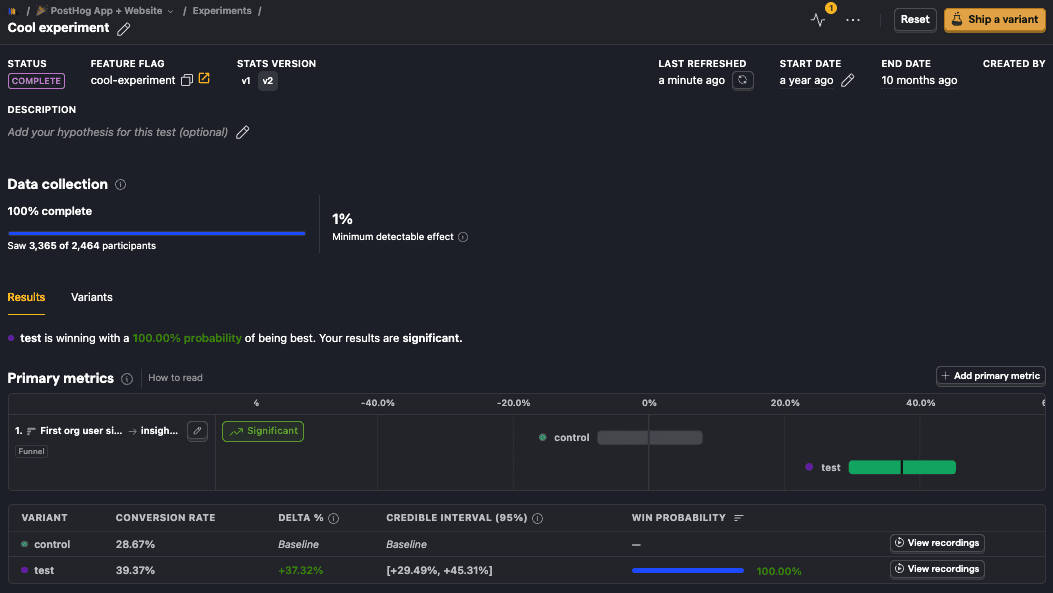

Viewing experiment results

While the experiment is running, you can see its results on the experiment's details page. This page shows:

- Status: whether the experiment is a draft, running, or complete, and whether it has reached statistical significance.

- Data collection progress based on an estimated target number of participants and minimum detectable effect.

- Win probability for each variant.

- Statistical significance for the results.

- Variants, their release conditions and distribution.

- Primary and secondary metrics.
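Two of these figures, win probability and data collection progress, can be approximated by hand. The Python sketch below is an illustration under assumptions, not PostHog's exact calculation: it estimates win probability with a common Bayesian approach (a Beta posterior per variant with a uniform prior, compared by Monte Carlo sampling), and it sizes the participant target with Lehr's rule of thumb, which assumes a conversion-rate metric, roughly 80% power, and a two-sided 5% significance level. The function names and example numbers are hypothetical.

```python
import math
import random
from typing import Dict, Tuple


def win_probabilities(results: Dict[str, Tuple[int, int]],
                      samples: int = 20_000, seed: int = 0) -> Dict[str, float]:
    """Estimate, for each variant, P(its conversion rate is the highest).

    `results` maps variant name -> (conversions, exposures).
    """
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    wins = {name: 0 for name in results}
    for _ in range(samples):
        # One draw from each variant's Beta(1 + successes, 1 + failures) posterior.
        draws = {name: rng.betavariate(1 + conv, 1 + exposed - conv)
                 for name, (conv, exposed) in results.items()}
        wins[max(draws, key=draws.get)] += 1
    return {name: count / samples for name, count in wins.items()}


def target_per_variant(baseline_rate: float, mde: float) -> int:
    """Lehr's rule: n ≈ 16 * p * (1 - p) / mde**2 participants per variant.

    `mde` is the absolute change in conversion rate to detect (0.02 = 2 points).
    """
    return math.ceil(16 * baseline_rate * (1 - baseline_rate) / mde**2)


def collection_progress(exposures: int, target: int) -> float:
    """Fraction of the target collected so far, capped at 1.0."""
    return min(1.0, exposures / target)


results = {"control": (100, 1000), "test": (150, 1000)}
print(win_probabilities(results))
target = target_per_variant(baseline_rate=0.10, mde=0.02)
print(target, collection_progress(1000, target))
```

With a 10% baseline and a 2-point minimum detectable effect, Lehr's rule gives roughly 3,600 participants per variant, so 1,000 exposures would show as a little under 30% progress. A smaller minimum detectable effect raises the target quadratically.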

Ending an experiment

After you've analyzed your experiment metrics and are ready to end the experiment, click the "Ship a variant" button on the experiment page to roll out a variant and stop the experiment. This button only appears once the experiment has reached statistical significance.

If you want more precise control over your release, you can also set the release condition for the feature flag and stop the experiment manually.

Beyond this, we recommend:

- Sharing the results with your team.

- Documenting conclusions and findings in your experiment's description field. This helps preserve historical context for future team members.

- Removing the experiment code and the losing variants' code.

- Archiving the experiment.

Further reading

Want to learn more about how to run successful experiments in PostHog? Try these tutorials: